DevOpsCon 2018 Summary

Our development team attended this year’s DevOpsCon in Berlin. DevOpsCon is one of the major conferences in the field of development and operations. The event focuses on continuous delivery, microservices, Docker, cloud environments and lean business applications. In addition to interesting talks and keynotes, an accompanying exhibition lets you talk directly to representatives of the industry’s leading companies.

The growing degree of digital transformation does not only affect software products themselves. The entire development, operation and deployment cycle also has to be automated and improved accordingly. Shortened delivery times, constantly changing requirements and rapidly growing environments force software vendors to choose sophisticated technologies that ensure software integrity and quality within appropriate and scalable enterprise infrastructures. Solving these challenges requires engineers with a focus on DevOps, a field of growing importance.

What does DevOps mean exactly?

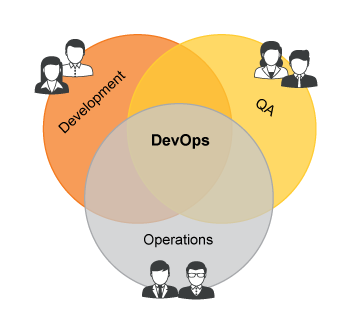

The buzzword “DevOps” describes practices within the software delivery process that aim to improve speed, reliability and security. The DevOps routine is characterized by feedback streaming from production back to development, regular updates of applications and infrastructure, on-demand scaling and highly automated workflows. One of its most important goals is to improve the collaboration between development and operations. It is the combination of cultural philosophies, practices and tools that increases an organization’s ability to deliver applications and services at high velocity. At the end of the day it comes down to:

Docker – a container is shaping IT landscapes around the world

Docker has quite likely been one of the most impactful technologies of the past few years. Companies use it internally to run their infrastructure, write code, execute tests and set up their production environments. Regardless of whether the containers run on-premises or in the cloud, the built-in tools for administrative tasks are very limited. In complex environments with a large number of containers, the system can no longer be managed adequately with these tools alone. An overall service orchestration system has therefore become a necessity, and various solutions have emerged to take on that task.

Kubernetes and large-scale service orchestration

A lot of the talks at DevOpsCon revolved around Kubernetes or at least mentioned it positively. It seems likely that this trend will persist and shape the future of containerized application management. Kubernetes is an open source system that automates deployments, scales applications and manages containers grouped into logical units. Basically, it adds an abstraction layer to the infrastructure and reduces the need for direct interaction with resources. For example, you can manage thousands of containers in different locations (cloud and/or on-premises) without detailed knowledge of the container technology and platforms in the background. Despite all these advantages, the basic installation and configuration of the system is very complex. Because of that, more and more well-known companies (e.g. SUSE) develop their own commercial platforms based on Kubernetes, which help to alleviate these difficulties and make it easier for users to deploy such systems.

Measuring the unknown

One of the most important tasks in the daily operations of technology companies today is application and log monitoring. The goal is to keep track of the health of software environments. Early detection of performance bottlenecks or malfunctions within applications is a basic requirement for smooth, reliable operations.
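As a rough illustration of what early detection of a performance bottleneck can look like, the following minimal sketch flags response-time samples that lie far above the recent average. The function name `find_latency_spikes`, the window size, the threshold and the sample values are all assumptions made for this example. They do not come from any tool presented at the conference, and a real monitoring stack would do considerably more.

```python
from statistics import mean, stdev


def find_latency_spikes(samples_ms, window=5, threshold=3.0, min_sigma=1.0):
    """Return the indices of samples that lie more than `threshold`
    standard deviations above the mean of the preceding `window` samples.

    `min_sigma` is a floor for the standard deviation so that a perfectly
    flat history does not cause a division by zero.
    """
    spikes = []
    for i in range(window, len(samples_ms)):
        history = samples_ms[i - window:i]
        mu = mean(history)
        sigma = max(stdev(history), min_sigma)
        # Only upward deviations count: slower responses are the problem.
        if (samples_ms[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Hypothetical response times in milliseconds, invented for illustration.
latencies = [100, 102, 98, 101, 99, 100, 250, 101, 99]
print(find_latency_spikes(latencies))
```

Comparing each sample only with its recent history keeps the check cheap and lets it adapt to slow drifts. The price is that a single spike inflates the baseline for the next few samples, so a second spike shortly afterwards may be missed.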

There are a lot of things that can be measured, but not all metrics are meaningful on their own. Other values are hard to obtain and thus even harder to measure. You’ll likely get two very different opinions on how to address this topic if you ask experts:

- The first group shares the idea of focusing on the basics and selecting only meaningful metrics. You should ask yourself whether a specific metric really answers a necessary question. Furthermore, does the level of entropy allow a strong data correlation, or does it lead to wrong assumptions? For example, a rise in the number of closed QA tickets does not necessarily mean that software quality has increased by the same magnitude. Even if a metric turns out to be less meaningful for your own purposes, it might still be meaningful to your customer. If so, add it to your list and be delighted: you have just added business value!

- The second group of experts holds the opinion that more is more. Every piece of information you can obtain can be useful in the right context. You may not know yet which metrics and what information will serve you well in the future. Being and staying prepared is the main benefit of this approach.

Both strategies have their own strengths and weaknesses, but in the end a deliberate mix of the two could prove to be most effective.
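The QA ticket example can be made concrete with a small check of how strongly a candidate metric actually tracks the quantity you care about. The sketch below computes the Pearson correlation coefficient by hand. The sprint figures are invented purely for illustration, and the names `closed_tickets` and `production_defects` are assumptions, not data from the conference.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long number series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical figures per sprint, made up for this example.
closed_tickets = [12, 18, 25, 31, 40, 47]
production_defects = [9, 11, 8, 10, 9, 10]

r = pearson(closed_tickets, production_defects)
print(f"correlation between closed tickets and production defects: {r:.2f}")
```

In this made-up data set the number of closed tickets rises steadily while production defects barely change, so the coefficient stays close to zero. A result like that suggests the ticket count says little about quality on its own, although a handful of data points and a correlation coefficient are no proof either way.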

Maintaining security in complex IT environments

Another key topic was security. While technologies like Docker were in the past mostly meant for development and application testing, they are ready for production use today. More and more companies take advantage of these technologies and build their continuous delivery process on that technology stack. With regard to security, such container-based infrastructures come with some extra complexity and new requirements. Security objectives such as availability are much easier to achieve in the world of containers, thanks to simple scaling. On the other hand, the network and application configuration itself tends to become more complex. Especially in the context of cloud environments, operators need to be very careful. The key security-related topics lie in the areas of permissions and storage management, as well as network and system reliability. Companies need to keep an eye on these aspects and prepare for the increasing level of complexity.

Our conclusion – two days stack-full of valuable insights

Both days in Berlin were very interesting for our team. Most talks gave a good impression of how other companies address certain challenges and what their experience has been with specific tools in the context of DevOps. By visiting the exhibition and talking to colleagues and experts, we were able to identify new technologies, products and potential technology partners. As a result, the engineers at NTS Retail will use these newly acquired insights to initiate evaluation projects. The goal is to implement internal prototypes in order to check how well certain technologies are suited for practical use. In the long term, our customers will benefit from fast, flexible, high-quality solutions built on a state-of-the-art technology stack.